New blog backend

Recently I changed the way this website is hosted, served and deployed. I’ll describe what I changed and why I did so.

The topics I’ll discuss:

- Hosting: I moved from a RamNode virtual private server (VPS) to one from DigitalOcean.

- Serving: I am now using Docker containers to serve this website.

- Deployment: The code behind this website has moved from GitHub to GitLab and I am using their CI/CD to build the Docker image for this site.

- Performance: The difference in performance between my old RamNode setup and the new DigitalOcean machine is minimal.

- Future: Looking forward to new challenges.

Hosting

RamNode

I have used RamNode since late 2012. They have been a reliable partner throughout the years. Their support staff have always responded quickly to my questions, and they are very friendly and willing to go the extra mile. I have been a very happy customer.

I have run this blog on a RamNode VPS since April 2013. However, my setup got outdated. I was still running Debian Jessie which also meant that most visitors could not use HTTP/2 with my website. The reason is that the OpenSSL version in Jessie did not support Application‑Layer Protocol Negotiation (ALPN). (For more information about the exact issue, see Supporting HTTP/2 for Website Visitors.)

Time to upgrade to Debian Stretch. And since the machine was provisioned using Puppet, that should have been a breeze, right? Well, sure. If not for two things:

- I had not used Puppet to install Certbot, which is responsible for the TLS certificates, so I needed to figure out again how to install that software.

- I had fallen out of love with Puppet and wanted to convert my code to Ansible.

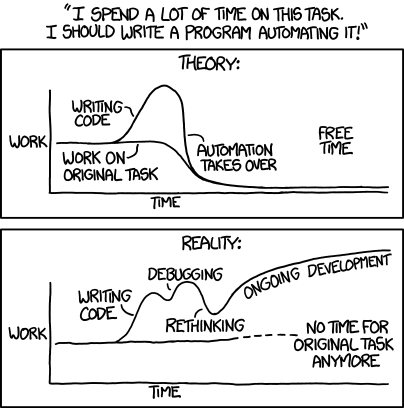

Basically this XKCD comic all over again:

Source: XKCD

Where I previously used Vagrant to test my (Puppet) code, I now wanted to use Terraform. The big advantage is that you don’t have to work on a machine that merely resembles the one you will run in production: you can actually use the exact same machine.

Unfortunately this also meant I could not use RamNode. They don’t offer a “pay-as-you-go” model like cloud providers do. And since I was going to work intermittently on this project, I was looking for a provider where I could create a machine, use it for however long I needed (ranging from a few minutes to a couple of hours) and then destroy it, while only paying for the time I actually used the resources.

Scaleway

This is where Scaleway comes into the picture. Not only do they provide on-demand compute instances, there is also a Scaleway provider for Terraform.

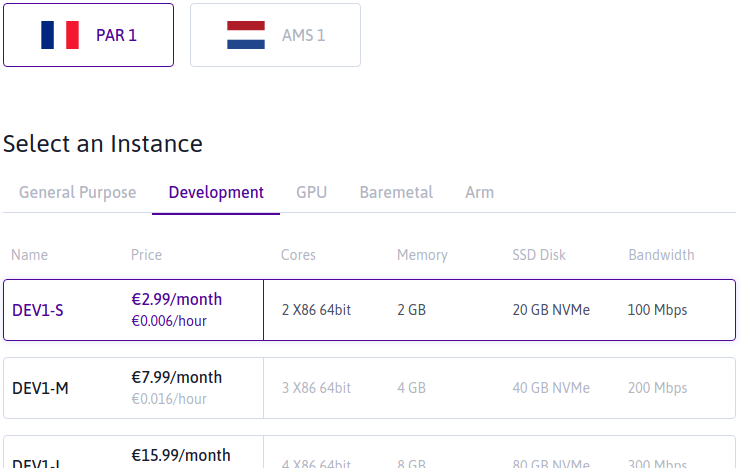

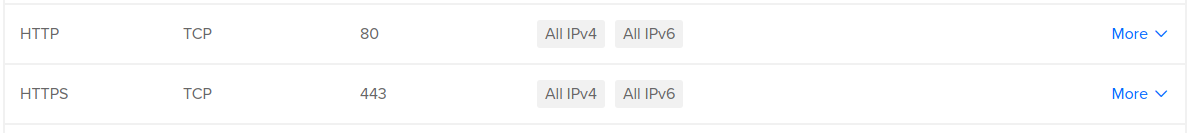

Their pricing is also fantastic. You can get a “development server” (DEV1-S) with 2 cores and 2GB memory for €2.99 a month.

In their Amsterdam data center, you can even get a “Start server” (START1-XS) with 1 core and 1GB memory for as little as €1.99 a month.

(Note that the Scaleway pricing page does not list the Amsterdam offerings, so they might align these options in the future.)

For small applications (like this blog) or for experiments these are great machines. Provisioning is quick and besides compute resources, Scaleway offers a bunch of other services (like object storage and virtual firewalls).
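Because Scaleway has a Terraform provider, a throwaway machine for this kind of intermittent work only takes a few lines of configuration. The following is a minimal sketch, not the configuration used for this site: it assumes the current scaleway/scaleway provider, and the resource names and the image label are hypothetical (check which images the zone actually offers).

```hcl
terraform {
  required_providers {
    scaleway = {
      source = "scaleway/scaleway"
    }
  }
}

# The provider reads credentials from the SCW_ACCESS_KEY and SCW_SECRET_KEY
# environment variables (and the project from SCW_DEFAULT_PROJECT_ID).

# Reserve a public IPv4 address in the Amsterdam zone.
resource "scaleway_instance_ip" "test" {
  zone = "nl-ams-1"
}

# Hypothetical test server: a DEV1-S (2 cores, 2 GB memory).
resource "scaleway_instance_server" "test" {
  name  = "blog-test"        # hypothetical name
  type  = "DEV1-S"
  image = "debian_bookworm"  # assumed image label
  zone  = "nl-ams-1"
  ip_id = scaleway_instance_ip.test.id
}
```

With this, "terraform apply" creates the machine and "terraform destroy" removes it again, which is what makes the pay-as-you-go model attractive when you only work on a project now and then.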

Unfortunately I only discovered after migrating this site from RamNode to Scaleway that the Scaleway IPv6 offering is not mature enough for my taste. So I migrated back to RamNode (that is: I changed the DNS records back) and worked on plan B.

DigitalOcean

That new plan was to move to DigitalOcean (referral link). I have used DigitalOcean in the past for a few experiments and liked the experience. They provide a wide range of services, which include managed Kubernetes clusters, object storage and cloud firewalls. There is a DigitalOcean provider for Terraform and the IPv6 support seems to be what I need.
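A Droplet comparable to the one this site now runs on (1 GB memory, 1 CPU and 25 GB SSD storage, in a Dutch data center) could be declared as below. This is a sketch: the resource name, the region slug ams3, the size slug s-1vcpu-1gb and the image slug are assumptions, not taken from the actual setup. The ipv6 argument is what gives the Droplet a public IPv6 address.

```hcl
terraform {
  required_providers {
    digitalocean = {
      source = "digitalocean/digitalocean"
    }
  }
}

# The provider reads the API token from the DIGITALOCEAN_TOKEN environment variable.
resource "digitalocean_droplet" "web" {
  name   = "web"            # hypothetical name
  region = "ams3"           # Amsterdam
  size   = "s-1vcpu-1gb"    # 1 vCPU, 1 GB memory, 25 GB SSD
  image  = "debian-12-x64"  # assumed image slug
  ipv6   = true             # also assign a public IPv6 address
}

output "ipv4_address" {
  value = digitalocean_droplet.web.ipv4_address
}

output "ipv6_address" {
  value = digitalocean_droplet.web.ipv6_address
}
```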

With this snippet of Terraform code:

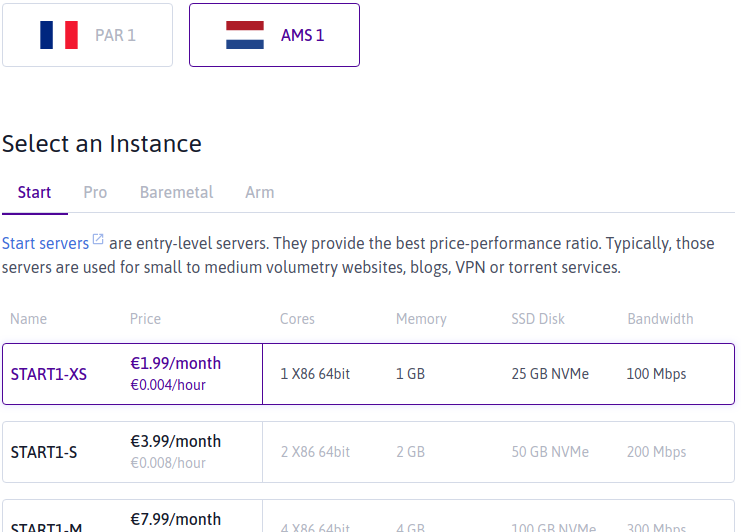

```hcl
resource "digitalocean_firewall" "webserver" {
  # ...

  # Allow HTTP from every IPv4 and IPv6 address.
  inbound_rule {
    protocol         = "tcp"
    port_range       = "80"
    source_addresses = ["0.0.0.0/0", "::/0"]
  }

  # Allow HTTPS from every IPv4 and IPv6 address.
  inbound_rule {
    protocol         = "tcp"
    port_range       = "443"
    source_addresses = ["0.0.0.0/0", "::/0"]
  }
}
```

I can configure my firewall to allow incoming traffic on ports 80 and 443 from all IPv4 and IPv6 sources:

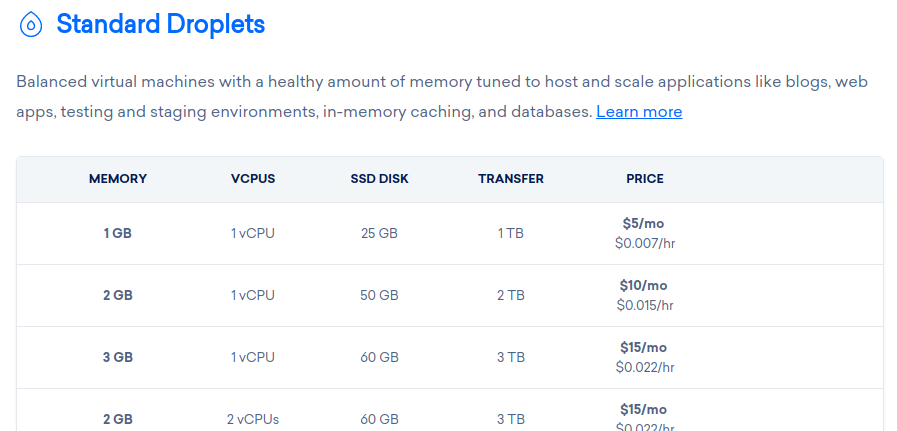
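One thing worth keeping in mind with DigitalOcean cloud firewalls: traffic that no rule explicitly allows is dropped, and that includes outgoing traffic. If the elided part of the resource above does not already contain outbound rules, the machine itself could not fetch package updates, pull Docker images or talk to Let’s Encrypt. The sketch below is one assumed way to handle that, not part of the original configuration: a second, hypothetical firewall that allows all outbound traffic for every Droplet carrying a hypothetical "webserver" tag. Several firewalls can apply to the same Droplet; their rules are combined. The Droplet itself would need that tag to be covered.

```hcl
# Hypothetical tag used to select the Droplets this firewall applies to.
resource "digitalocean_tag" "webserver" {
  name = "webserver"
}

# Hypothetical companion firewall: allow all outgoing traffic.
resource "digitalocean_firewall" "webserver_outbound" {
  name = "webserver-outbound"
  tags = [digitalocean_tag.webserver.name]

  outbound_rule {
    protocol              = "tcp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }

  outbound_rule {
    protocol              = "udp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }

  # ICMP has no ports, so no port_range here.
  outbound_rule {
    protocol              = "icmp"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }
}
```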

Do note that DigitalOcean Droplets are more expensive than the Scaleway servers. But I still think they provide good value for money.

Source: the DigitalOcean pricing page

With the hosting sorted out, the next piece of the puzzle is the way this website is served.

Serving the content

I used to have Nginx running directly on the machine, serving the static files that make up this website. That is a perfectly fine setup: it does not have too many moving parts, and deploying a new version can be as simple as copying a bunch of files over to the machine. But…

It wasn’t very exciting to me. And to be honest, that is also part of why I run my own blog instead of using services like WordPress or Medium—I want to tinker with this stuff. (That and the fact that I want to own my own content.)

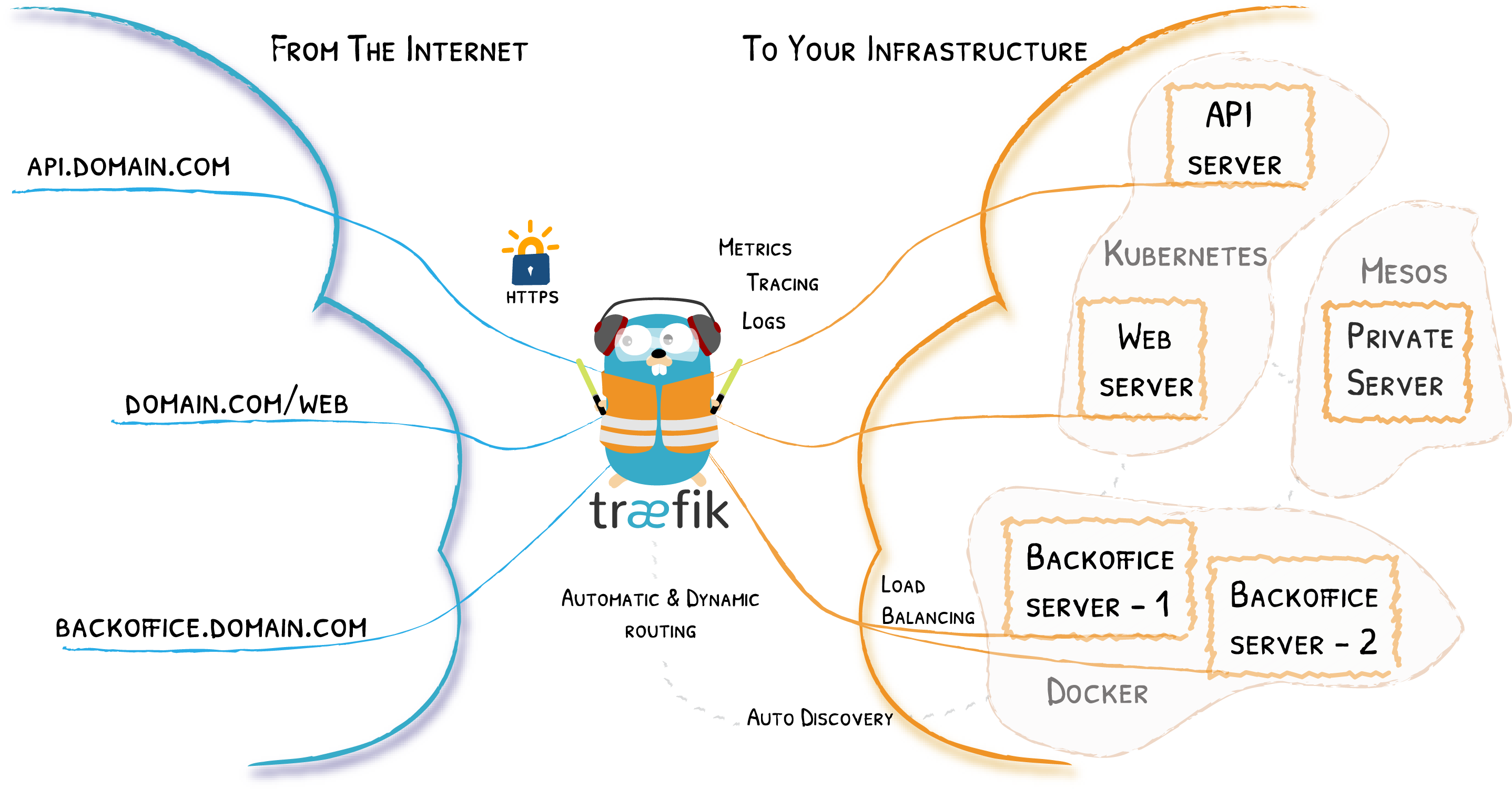

So the new setup is using Traefik as a reverse proxy. The big selling points for me are the fact that it handles the TLS certificates for me (via Let’s Encrypt, which I was already using) and that I can easily add backend services by spinning up Docker containers with the right labels.

Source: Traefik documentation

Which is exactly what I did for this blog. It is now a Docker container with Nginx as webserver. This webserver still serves the static content that makes up this website.
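The post does not say how the containers are started, so the following is only a sketch of one way to express this setup, assuming the Terraform Docker provider (kreuzwerker/docker, v3) and Traefik v2 label syntax. It runs Traefik with the Docker provider and Let’s Encrypt enabled, plus a blog container that Traefik picks up purely through its labels. The Traefik version, the ACME e-mail address, the host path, the resolver name "le" and the GitLab image path are hypothetical. Without a provider block, the Docker provider talks to the local Docker daemon socket.

```hcl
terraform {
  required_providers {
    docker = {
      source = "kreuzwerker/docker"
    }
  }
}

# Shared network so Traefik can reach the backend containers.
resource "docker_network" "web" {
  name = "web"
}

resource "docker_image" "traefik" {
  name = "traefik:v2.10" # assumed version
}

resource "docker_container" "traefik" {
  name    = "traefik"
  image   = docker_image.traefik.image_id
  restart = "unless-stopped"

  command = [
    # Only route to containers that opt in with traefik.enable=true.
    "--providers.docker=true",
    "--providers.docker.exposedbydefault=false",
    "--entrypoints.web.address=:80",
    "--entrypoints.websecure.address=:443",
    # Redirect plain HTTP to HTTPS.
    "--entrypoints.web.http.redirections.entrypoint.to=websecure",
    "--entrypoints.web.http.redirections.entrypoint.scheme=https",
    # Let's Encrypt certificates via the TLS-ALPN-01 challenge.
    "--certificatesresolvers.le.acme.tlschallenge=true",
    "--certificatesresolvers.le.acme.email=admin@example.com", # hypothetical
    "--certificatesresolvers.le.acme.storage=/letsencrypt/acme.json",
  ]

  ports {
    internal = 80
    external = 80
  }
  ports {
    internal = 443
    external = 443
  }

  # Read-only access to the Docker API, so Traefik can watch container labels.
  volumes {
    host_path      = "/var/run/docker.sock"
    container_path = "/var/run/docker.sock"
    read_only      = true
  }
  # Keep the certificates across container restarts.
  volumes {
    host_path      = "/srv/traefik/letsencrypt" # hypothetical path
    container_path = "/letsencrypt"
  }

  networks_advanced {
    name = docker_network.web.name
  }
}

# The blog: an Nginx-based image with the static files baked in.
resource "docker_image" "blog" {
  name = "registry.gitlab.com/example/blog:latest" # hypothetical path
}

resource "docker_container" "blog" {
  name    = "blog"
  image   = docker_image.blog.image_id
  restart = "unless-stopped"

  # These labels are all Traefik needs to route to this container and secure it.
  labels {
    label = "traefik.enable"
    value = "true"
  }
  labels {
    label = "traefik.http.routers.blog.rule"
    value = "Host(`www.vlent.nl`)"
  }
  labels {
    label = "traefik.http.routers.blog.entrypoints"
    value = "websecure"
  }
  labels {
    label = "traefik.http.routers.blog.tls.certresolver"
    value = "le"
  }

  networks_advanced {
    name = docker_network.web.name
  }
}
```

Adding another service, such as a self-hosted comment system, would then be one more container on the same network with its own router labels; Traefik itself does not need to be touched.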

In the future I might spin up additional services (I am thinking about replacing Disqus with something self-hosted), and this setup should make that easier.

Note that running the services in Docker containers also means that the services exposed to the internet (the reverse proxy and webserver) no longer receive automatic updates via Apt. I will have to keep the Docker images I am using up-to-date myself, either by building them myself or by regularly pulling recent versions. On the upside: I am no longer bound to the version available in a Debian repository; I can run any version of Traefik or Nginx I like, as long as it is packaged in a Docker image.
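Assuming the containers were managed with the Terraform Docker provider (kreuzwerker/docker), which the post does not state, "regularly pulling recent versions" could look like the sketch below. The docker_registry_image data source asks the registry which digest a tag currently points to, and pull_triggers makes Terraform pull the image again whenever that digest changes; it takes the place of a plain docker_image resource. A container that uses this image’s image_id is then recreated on the next "terraform apply". The traefik:v2.10 tag is an assumed example.

```hcl
# Ask the registry which digest the tag currently points to (checked on every plan).
data "docker_registry_image" "traefik" {
  name = "traefik:v2.10" # assumed tag
}

# Pull the image again whenever the digest behind the tag changes.
resource "docker_image" "traefik" {
  name          = data.docker_registry_image.traefik.name
  pull_triggers = [data.docker_registry_image.traefik.sha256_digest]
}
```

This still requires someone (or a scheduled job) to run "terraform apply"; it only removes the manual pull and restart.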

A noticeable downside of this setup is that deploying a new version is a bit more complex. I can no longer “scp” files to the right directory. Instead I have to supply a Docker image.

Code repository

Enter GitLab. Their service not only includes a Git repository, but also CI/CD and a container registry. In other words: when I push a change, GitLab can automatically build a new image for me.

And that is exactly what I have set up at the moment. When I’m finished with this article, I will:

- “git push” it to GitLab,

- wait a moment for the image to build (about 2 minutes currently),

- “ssh” in to my VPS,

- pull the fresh image, and

- start a container with the new image.
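The last three steps (logging in, pulling and starting a container) are the kind of work the Terraform Docker provider could take over, assuming it were used, which the post does not say. The provider can reach the Docker daemon on a remote machine over SSH and authenticate against GitLab’s container registry. The SSH user, the IP address (a documentation address), the image path and the variable names below are hypothetical. After the pipeline has pushed a new image, running "terraform apply" from a workstation would pull it, and a container resource that uses docker_image.blog.image_id would be recreated with the new image.

```hcl
variable "gitlab_username" {
  type = string
}

variable "gitlab_token" {
  type      = string
  sensitive = true # e.g. a GitLab deploy token with the read_registry scope
}

provider "docker" {
  # Reach the Docker daemon on the VPS over SSH instead of logging in by hand.
  host = "ssh://deploy@203.0.113.10:22" # hypothetical user and address

  # Credentials for pulling private images from GitLab's container registry.
  registry_auth {
    address  = "registry.gitlab.com"
    username = var.gitlab_username
    password = var.gitlab_token
  }
}

# Digest of the image the most recent pipeline pushed.
data "docker_registry_image" "blog" {
  name = "registry.gitlab.com/example/blog:latest" # hypothetical path
}

# Pull the new image whenever the digest changes.
resource "docker_image" "blog" {
  name          = data.docker_registry_image.blog.name
  pull_triggers = [data.docker_registry_image.blog.sha256_digest]
}
```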

Like I said, this is a bit more complex than before where I could copy over my static files.

Then again, that is not a completely fair comparison. Compiling the static files, generating the CSS from SASS and optimizing the images were things I had to do manually before I could copy over the files. But because those actions were triggered by a single command (“make publish”), it feels like it was less complex.

On the bright side: now that GitLab is building the content, I have clean, reproducible builds. There is no longer a risk of unfinished articles popping up in production. (Assuming I don’t accidentally commit and push them…)

Performance

Just like the last time when I switched hosting providers, I was curious about the impact of the change on the performance of this site. Especially because in the new situation Chrome can use HTTP/2 instead of falling back to HTTP/1.1.

For reference: at RamNode I had a standard KVM with 512 MB RAM, 1 CPU core and 10 GB storage on an SSD. At DigitalOcean I am using a droplet with 1 GB memory, 1 CPU and 25 GB storage on an SSD. Both machines are running in a data center in the Netherlands.

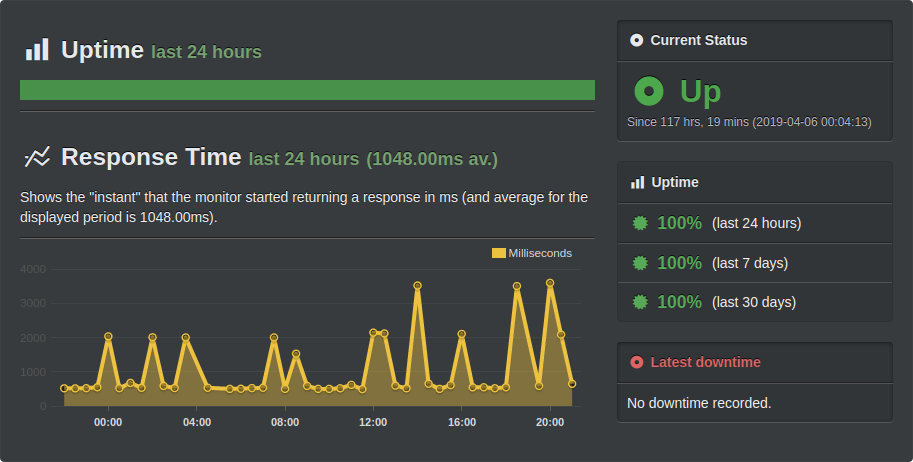

Uptime Robot

I am monitoring my website via Uptime Robot. They can show me the response time of the monitors.

| Monitor | Old (ms) | New (ms) |
|---|---|---|
| Ping (<hostname>.vlent.nl) | 119.56 | 118.14 |
| HTTPS request IPv4 (https://www.vlent.nl) | 523.74 | 523.57 |
| HTTPS request IPv6 (https://www.vlent.nl) | 1048.00 | 505.84 |

These are averages over the last 24 hours. Overall the results are comparable. The only big difference is in the IPv6 response times, but it looks like it was a bad day for IPv6 at RamNode, as you can see in the image. (If you exclude the spikes, those times are also in the 500-ish ms range.)

WebPageTest

I also ran a few tests at WebPageTest. I picked a (more or less) random page, my previous post, assuming it is representative of most of the content here: a page with a few images.

I ran two sets of tests. The first one was a run of 9 tests from Dulles, VA using Chrome on a cable connection. The second set was a run of 5 tests from Dulles, VA using Chrome on a Nexus 5 on a 3G connection. The results in the table below are the values reported as the median run.

| Metric | Cable/Old (s) | Cable/New (s) | 3G/Old (s) | 3G/New (s) |
|---|---|---|---|---|
| Load time | 3.228 | 3.052 | 10.993 | 10.947 |
| First byte | 0.638 | 0.488 | 2.368 | 2.145 |
| Start render | 1.100 | 0.900 | 3.975 | |
| Speed index | 1.305 | 1.847 | 4.761 | |
| First interactive | >1.105 | >0.932 | >6.920 | >10.925 |
| Fully loaded | 3.376 | 3.255 | 11.544 | 11.412 |

In these numbers there are no shocking differences between the old and the new setup. The new site does seem to be 100–200 ms faster than the old one, though, except for the speed index on the cable connection and the load time and first interactive time on the Nexus 5 on the 3G connection.

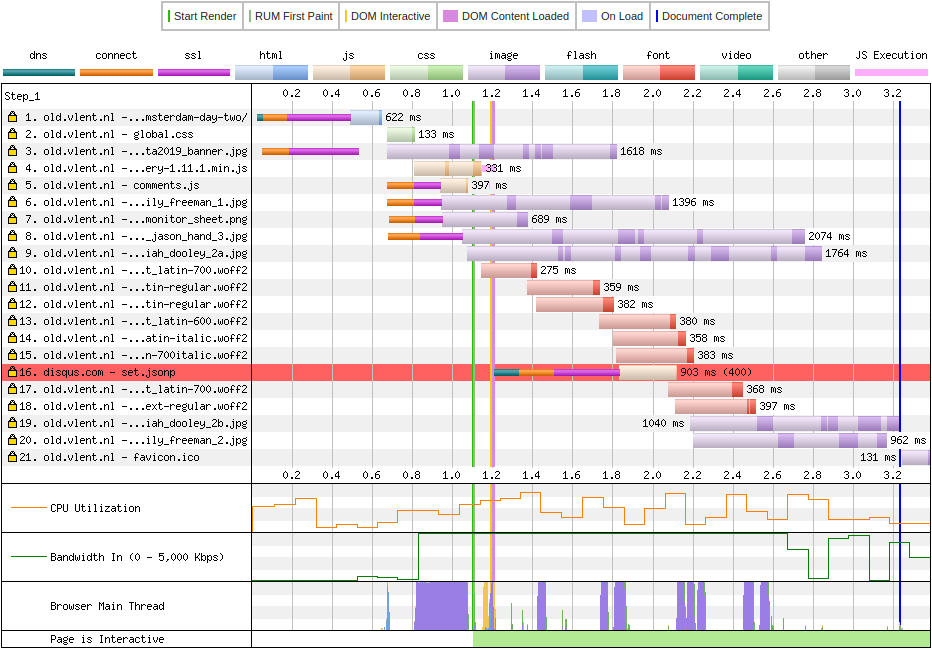

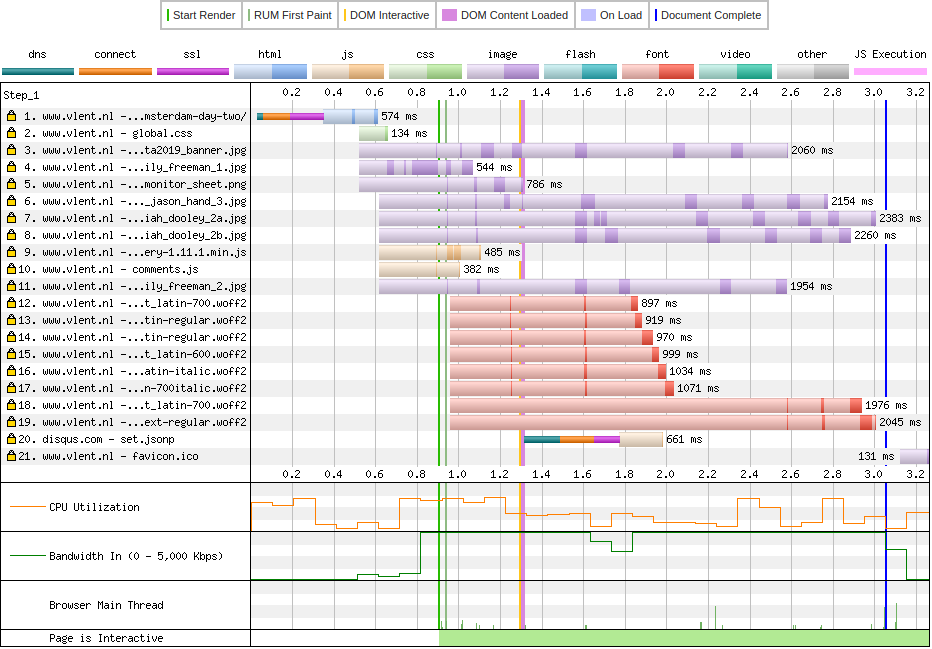

The biggest differences are in the waterfall graphs.

Waterfall view, old site

Waterfall view, new site

You can nicely see the difference between HTTP/1.1 and HTTP/2 here. The old setup uses six separate connections to my site, whereas the new setup uses only one.

The future

Migrating this site had been blocking me for a while. I felt like I had to do this first before I could start working on other things. Now that this is finished, I can pick up those things.

Things like (in random order):

- I would like to replace Disqus with a self-hosted solution (Isso?).

- Webmentions look interesting. Perhaps I can use them as well?

- I might even migrate away from Acrylamid. I still like the idea of having a static website though, and I’m looking at Hugo at the moment.

But I might also want to improve the current setup a bit more:

- The current Docker image building process works, but there are a few things I could improve or at least clean up. (Then again: if I move to e.g. Hugo this might not be as relevant anymore.)

- Pulling a new Docker image and restarting the service with the new image is something that I should be able to further (or even fully) automate. Ideally there will no longer be a need for me to log into the server. Pushing the new post to GitLab should be enough.

So plenty of fun stuff to tinker with!